The Data Traffic Bottleneck

If you haven't heard about the data traffic bottleneck, it's only a matter of time until you hear about it quite a lot. Until recently, it's only been discussed in tech circles, but the implications affect all of us.

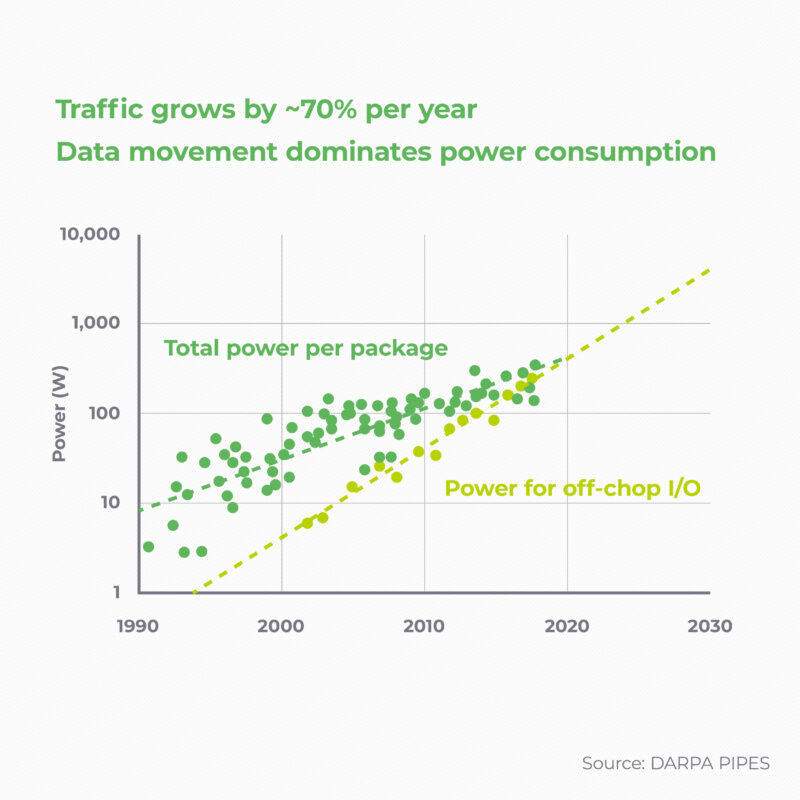

In short, global demand for data is increasing, and so are the processing requirements for that data. However, I/O bandwidth, the rate at which data can be moved between chips and systems, has not kept pace. If things continue at the same rate with the same disparity, there will be a serious crunch in a few years.

But it’s not all gloom and doom, so don’t panic. There are solutions emerging – including those by Kandou – that are helping to make sure that we’re all ready to embrace a high-speed, data-ready world.

What is the data traffic bottleneck?

Ever since the first days of computing, a few simple thresholds have limited the potential of any given system: the data available to it and its ability to process that data. Over time, processing capacity has increased exponentially, allowing the average person, and the average business, to do extraordinary things, to say nothing of the industries and organisations at the bleeding edge of computing and data use.

Both processing capacity and demand for data have been accelerating, but not at the same rate. As datasets become mind-bogglingly large and complex, and demand for that data goes through the roof, the machines handling it are struggling to keep up. Put simply, we want to funnel more data through our systems than they will soon be able to handle, and that is creating an I/O bottleneck.
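To make the idea of a widening gap concrete, here is a toy model. The starting values and yearly growth multipliers are hypothetical, chosen only for illustration; the point is that when demand compounds faster than capacity, even generous initial headroom is eventually used up.

```python
def years_until_crunch(demand, capacity, demand_growth, capacity_growth, max_years=50):
    """Return the first year in which demand exceeds capacity, or None.

    Hypothetical toy model: demand and capacity share arbitrary units
    (e.g. GB/s), and growth rates are yearly multipliers (1.5 = +50%/year).
    """
    for year in range(max_years + 1):
        if demand > capacity:
            return year
        # Both quantities compound once per year.
        demand *= demand_growth
        capacity *= capacity_growth
    return None  # capacity kept up for the whole horizon


# Demand starts at half of capacity but grows faster.
print(years_until_crunch(50, 100, 2.0, 1.4))  # → 2
```

If capacity grows faster than demand (say `1.5` versus `1.2`), the function returns `None`, which is the scenario the industry is trying to reach.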

When will the bottleneck arrive?

That’s a tricky thing to answer. Over the past five years, the size of AI models has grown at an explosive rate of roughly 10x per year. As AI scales up, growing it further becomes economically and technically challenging and, in some instances, infeasible. With GPT-3 at 175 billion parameters and GPT-4 reportedly at more than 1 trillion, the bottleneck could arrive around the time we get to GPT-5.
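A quick back-of-the-envelope calculation shows why this growth strains data movement. The sketch below compounds the 10x-per-year figure over five years and estimates the size of GPT-3’s weights, assuming each of its 175 billion parameters is stored as a 2-byte (16-bit) number; other precisions would scale the result proportionally. GPT-4 is left out because its size has not been officially disclosed.

```python
def compound_growth(rate_per_year: float, years: int) -> float:
    """Total growth factor after compounding a yearly multiplier."""
    return rate_per_year ** years


def weight_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Storage for model weights; 2 bytes/param assumes 16-bit precision."""
    return num_params * bytes_per_param


growth = compound_growth(10, 5)                    # 10x per year for 5 years
footprint_gb = weight_bytes(175_000_000_000) / 1e9  # GPT-3 at 16-bit precision
print(f"{growth:,.0f}x growth, {footprint_gb:.0f} GB of weights")
# → 100,000x growth, 350 GB of weights
```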

What is causing it?

In a word, data centres. AI and ML are driving a data bonanza, and building bigger, higher-performance processors that can keep up is a serious technological challenge.

Servers at the sharp end of this growth, such as those used in analytics, high-performance computing, and cloud applications, especially those running deep learning models, already carry a significant I/O overhead. To keep up, computation increasingly has to be split out onto specialized hardware.

AI workloads are often highly parallelizable, which means they can use parallel processing techniques to improve performance. But as soon as the data can’t reach the processors fast enough, all those potential gains go up in smoke.
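One common way to reason about this is the roofline model: a processor’s attainable throughput is capped by whichever is lower, its peak compute rate or the rate at which data arrives multiplied by the work done per byte (its arithmetic intensity). The sketch below uses hypothetical figures to show that doubling peak compute buys nothing when the data link is the limit.

```python
def attainable_flops(peak_flops: int, bandwidth_bytes_per_s: int, flops_per_byte: int) -> int:
    """Roofline model: throughput is limited by compute or by data supply."""
    return min(peak_flops, bandwidth_bytes_per_s * flops_per_byte)


# Hypothetical accelerator fed over a 64 GB/s link, doing 100 FLOPs per byte.
link = 64 * 10**9
small = attainable_flops(100 * 10**12, link, 100)
big = attainable_flops(200 * 10**12, link, 100)  # twice the parallel compute

print(small == big)  # → True: both are capped at 6.4 TFLOP/s by the link
```

Only raising the bandwidth (or the work done per byte) moves the cap, which is why interconnect speed matters as much as raw compute.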

Many AI applications rely on GPUs for acceleration, but the I/O bottleneck limits the speed at which data can be transferred between the GPU and the server’s memory and storage systems. This degrades performance and lengthens training and inference times. In other words, data arrives more slowly, and expensive accelerators spend more of their time waiting for it.
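To put rough numbers on the transfer problem, the sketch below estimates the payload bandwidth of a serial link from its transfer rate, lane count and line encoding, then the time needed to move 350 GB (roughly GPT-3’s weights at 16-bit precision). The 32 GT/s, x16 and 128b/130b values correspond to a PCIe 5.0 x16 link; the function ignores packet and protocol overhead, so real-world throughput will be lower.

```python
def link_bandwidth_bytes(gt_per_s: float, lanes: int,
                         encoding_efficiency: float = 128 / 130) -> float:
    """Approximate one-direction bandwidth of a serial link in bytes/s.

    Accounts only for line encoding (default 128b/130b); packet and
    protocol overhead are ignored, so this is an upper bound.
    """
    bits_per_s = gt_per_s * 1e9 * lanes * encoding_efficiency
    return bits_per_s / 8


def transfer_seconds(num_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Time to move a payload at a constant bandwidth."""
    return num_bytes / bandwidth_bytes_per_s


bw = link_bandwidth_bytes(32, 16)  # PCIe 5.0-like x16 link
print(f"{bw / 1e9:.1f} GB/s")      # → 63.0 GB/s
print(f"{transfer_seconds(350e9, bw):.1f} s to move 350 GB")
```

Every time a workload has to shuttle data of that size across the link, the accelerator on the other end is waiting for it.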

From streaming games to making electronic payments, to video business meetings, data movement underpins everything we do. So, it seems sensible that we put preparations in place sooner rather than later.

What should we be doing?

If we want to keep the bottleneck at bay, widening it and ideally removing it, we need to make sure our heavyweight computing systems can talk to each other without suffering performance degradation and delays.

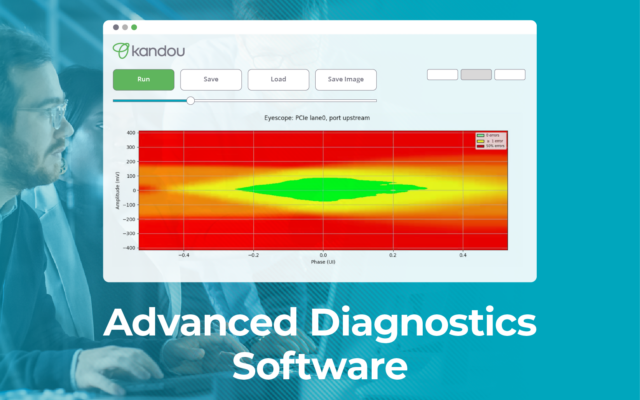

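That means low-latency, high-bandwidth solutions targeted at that specific issue. Chips of this kind, like Kandou’s Regli™ PCIe retimer, will dramatically improve the performance of AI too. A retimer recovers the incoming high-speed signal and retransmits a clean copy, extending how far data can travel across a board or cable without errors.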

While there are lots of high-speed interfaces on the market, the real hero of the hour is going to be heterogeneous computing, or more specifically CXL (Compute Express Link), which runs over the PCIe physical layer. CXL allows for memory expansion and pooling. Instead of a single monolithic approach to memory, a heterogeneous approach like CXL ensures that compute, memory, and storage can be arranged in the best way to tackle a given workload. In other words, processors, accelerators, and memory can talk to each other efficiently. If the industry reaches enough consensus on exploiting the potential that CXL and PCIe hold together, low-latency, high-integrity chips can supercharge the hardware and make sure it can handle what’s coming down the pipeline.
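Memory pooling is easier to see with a toy example. The Python below is not a CXL API or driver; it is a hypothetical model of four hosts, each needing some amount of memory. With statically partitioned memory, a host can only use its own 64 GB; with a shared pool of the same total size, a host that needs more can draw on capacity the others aren’t using.

```python
def fits_static(demands_gb, per_host_gb):
    """Static partitioning: each host may only use its own fixed memory."""
    return [d <= per_host_gb for d in demands_gb]


def fits_pooled(demands_gb, pool_gb):
    """Toy pooling model: hosts draw from one shared pool, first come first served."""
    free = pool_gb
    results = []
    for d in demands_gb:
        ok = d <= free
        if ok:
            free -= d  # capacity is claimed from the shared pool
        results.append(ok)
    return results


demands = [96, 16, 16, 16]           # one memory-hungry host, three light ones
print(fits_static(demands, 64))      # → [False, True, True, True]
print(fits_pooled(demands, 4 * 64))  # → [True, True, True, True]
```

The total memory is identical in both cases (256 GB); pooling simply lets it be allocated where the workload needs it.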

With a bit error rate of just 1 in 10 billion, Kandou’s Regli is only one small part of what will ultimately be a large, industry-wide solution. But as long as these emerging heterogeneous computing solutions are adopted as quickly as data use is increasing, the experience of end consumers will get better, not worse. And system designers the world over will be able to breathe a huge sigh of relief.