What is a retimer?

Retimers have been around since the 60s, when they were first used to help boost voice circuits over long distances. Advanced for their time, they did much the same as high-speed retimers do now – equalization, clock-data recovery, line coding, and framing.

These days they do pretty much the same job, providing plug-and-play conditioning for signals en route to their destination, and they are turning up more and more in conversations around serializer/deserializer (SerDes) links. Pushed far enough, any SerDes application is going to need some kind of enhanced reach.

In SerDes applications this job has largely been fulfilled by (mostly) analog redrivers.

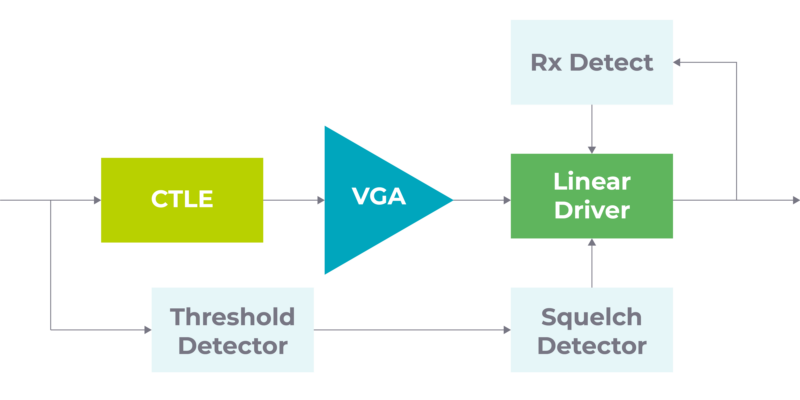

Redriver datapaths typically include:

- A CTLE to equalize the frequency dependent loss

- A VGA to restore the amplitude of the signal

- And a linear driver to correct impedance.

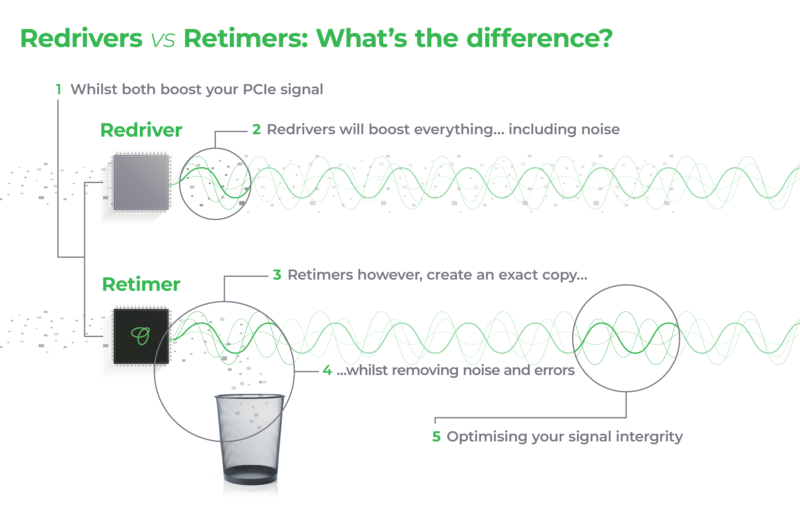

In other words, a redriver will take the signal and amplify it – noise and all.
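To see why amplification alone does not help the receiver, here is a minimal Python sketch. It treats the redriver as an ideal linear power gain plus a small noise contribution of its own. The function names and every number are illustrative assumptions, not measurements of any real part.

```python
import math

def snr_db(signal_power, noise_power):
    """Signal-to-noise ratio in decibels."""
    return 10 * math.log10(signal_power / noise_power)

def redriver(signal_power, noise_power, gain_db, added_noise_power=0.0):
    """Hypothetical linear redriver: scales signal and incoming noise by the
    same power gain, then adds its own (assumed) noise contribution."""
    gain = 10 ** (gain_db / 10)
    return signal_power * gain, noise_power * gain + added_noise_power

# Illustrative input in arbitrary power units: 20 dB SNR at the redriver input.
sig_in, noise_in = 1.0, 0.01
sig_out, noise_out = redriver(sig_in, noise_in, gain_db=10, added_noise_power=0.02)

print(f"SNR in:  {snr_db(sig_in, noise_in):.2f} dB")
print(f"SNR out: {snr_db(sig_out, noise_out):.2f} dB")
```

With these assumed values the output signal is ten times stronger, yet the SNR drops from 20 dB to about 19.2 dB. With `added_noise_power=0.0` the SNR simply stays at 20 dB: linear gain can never improve it.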

What’s wrong with a redriver?

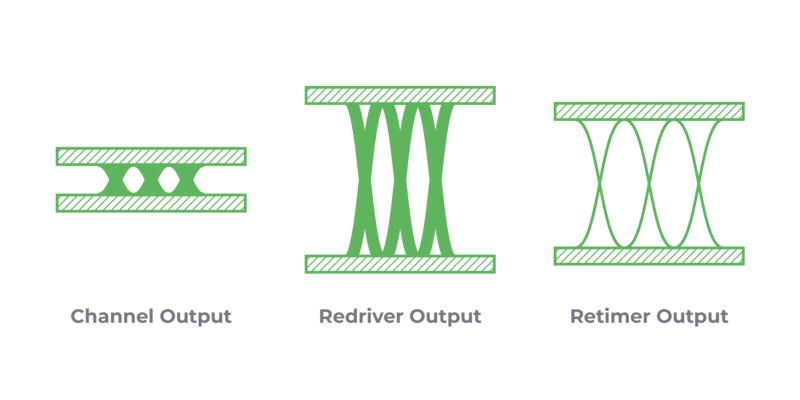

The main problem with redrivers is that noise is amplified along with the signal. A degraded signal-to-noise ratio over a lossy channel is manageable at low speeds, but the higher the data rate, the more of a problem it becomes. There are actually three issues at play:

- The CTLE and amplifier both have their own noise floors, so the receiver is having to isolate signal from compound noise.

- Additionally, a redriver can only partially clean up inter-symbol interference. Bits get smeared out and residual ISI always leaves the signal compromised to some extent.

- Redrivers can’t restore eye width and can introduce their own jitter. As this diagram shows, you need a good eye width at the receiver if you want error-free performance. But that ‘eye’ shape is degraded by thermal noise, skew, analog mismatches, rise/fall time mismatches, termination mismatches, ISI, and power supply noise.

Redrivers are simple, which is a good thing – in terms of design and budget – but they just aren’t designed to cope with these challenges past a certain point, so you can never employ the full reach of the link. Short trace lengths are inevitable and it is system developers who are left scratching their heads trying to balance all these different factors within an effective, efficient system.

The answer: retimers.

Why are retimers better?

Retimers are mixed-signal chips, combining analog and digital circuitry, which means they can be protocol aware. Rather than amplifying the original signal, they regenerate it: extracting the embedded clock, recovering the data, and retransmitting a fresh copy with a clean clock. So it’s not a game of ‘Telephone’ where the message decays the further it goes, it’s a relay in which the signal is recreated afresh at every stage.
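The difference between passing a signal along and regenerating it can be illustrated with a toy simulation. The sketch below is not a model of any real retimer: it assumes binary ±1 signalling, loss that is exactly compensated on each hop, and independent Gaussian noise per hop, and it ignores clock recovery and jitter entirely. The function name `run_link` and all parameters are hypothetical.

```python
import random

def run_link(bits, hops, sigma, regenerate, rng):
    """Send bits over `hops` noisy segments and return the bit-error count.

    regenerate=False: analog pass-through, so noise accumulates hop by hop.
    regenerate=True:  each hop makes a hard 0/1 decision and retransmits
                      a clean level, discarding the noise seen so far.
    """
    levels = [1.0 if b else -1.0 for b in bits]
    for _ in range(hops):
        levels = [v + rng.gauss(0.0, sigma) for v in levels]
        if regenerate:
            levels = [1.0 if v > 0 else -1.0 for v in levels]
    received = [v > 0 for v in levels]
    return sum(r != b for r, b in zip(received, bits))

rng = random.Random(1)  # fixed seed so the run is repeatable
bits = [rng.random() < 0.5 for _ in range(20000)]

redriver_errors = run_link(bits, hops=4, sigma=0.35, regenerate=False, rng=rng)
retimer_errors = run_link(bits, hops=4, sigma=0.35, regenerate=True, rng=rng)

print("pass-through chain errors:", redriver_errors)
print("regenerating chain errors:", retimer_errors)
```

Under these assumptions the regenerating chain should finish with far fewer bit errors, because each hop only has to survive its own noise rather than the sum of everything upstream. A wrong decision at one hop is still passed on, which is why the error rate of each stage matters.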

Whatever signal the APU is sending out, the retimer will convey to the destination – crisp and clear. And because they are protocol aware they can cope with emerging standards like Compute Express Link (CXL)®.

Why does this matter right now?

Historically, retimers have been useful but not essential. In fact, they were never popular in OIF/Ethernet ecosystems, which favored extremely tightly engineered links. But high-speed specifications are completely changing that. The world wants more data, and it wants it faster. New SerDes specifications like PCIe 5.0 and USB4 are designed to address this, but they are all high-speed and very tricky to implement without a retimer, and PCIe 6.0 and 7.0 will make it harder still. Everything from 5G to gaming, aerospace, and automotive relies on PCIe connections and is in the same boat, especially the data centers and hyperscalers trying to accelerate AI whilst coping with widespread memory access challenges.

With 8 Gbps lanes, redrivers could service PCIe 3.0 perfectly well back in 2010. When PCIe 4.0 in 2017 doubled the speed to 16 Gbps lanes, redrivers began to struggle – but they could cope.

However, since PCIe 5.0 in 2019, the same year the USB4 specification was announced, lane speeds have reached 32 Gbps, and the era of the redriver is over. With 20 Gbps lanes (40 Gbps links over 2 lanes) USB4 looks pretty beefy on the surface, but that 20 Gbps signal is actually more fragile than previous iterations. This means it is more vulnerable to all those noise issues like ISI, passband ripple, jitter sources, termination mismatches, analog mismatches, intra-pair skew, reflections, thermal noise, and power supply noise. Uptake of USB4 has been slow, but it’s only a matter of time before demand for data volume and speed sees USB4 take over, and retimers are obligatory on those links.
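One way to see why each speed bump hurts is to work out how long a single bit lasts on the wire. The short Python sketch below uses the lane rates quoted above and assumes one bit per symbol (NRZ signalling), treating the quoted figure as the raw line rate. The helper name `unit_interval_ps` is just for illustration.

```python
# Per-lane rates in Gbps, as quoted in the text above.
LANE_RATES_GBPS = {
    "PCIe 3.0": 8,
    "PCIe 4.0": 16,
    "USB4": 20,
    "PCIe 5.0": 32,
}

def unit_interval_ps(rate_gbps):
    """Duration of one bit in picoseconds, assuming one bit per symbol (NRZ)."""
    return 1000.0 / rate_gbps

for name, rate in LANE_RATES_GBPS.items():
    print(f"{name}: {rate} Gbps -> {unit_interval_ps(rate):.2f} ps per bit")
```

At 8 Gbps a bit occupies 125 ps; at 32 Gbps it occupies 31.25 ps. The same absolute amount of jitter therefore eats four times as much of the eye, which is the squeeze a purely analog redriver cannot undo.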

Why Kandou?

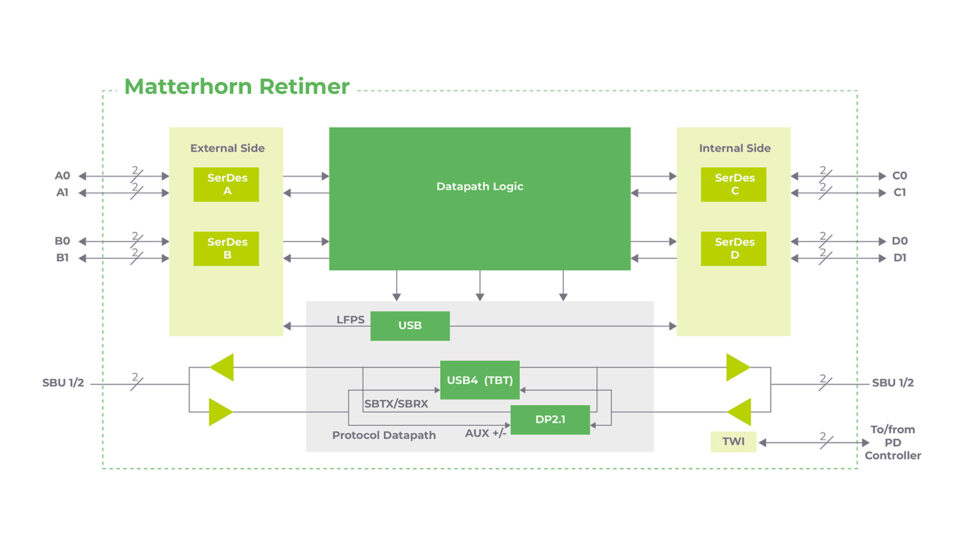

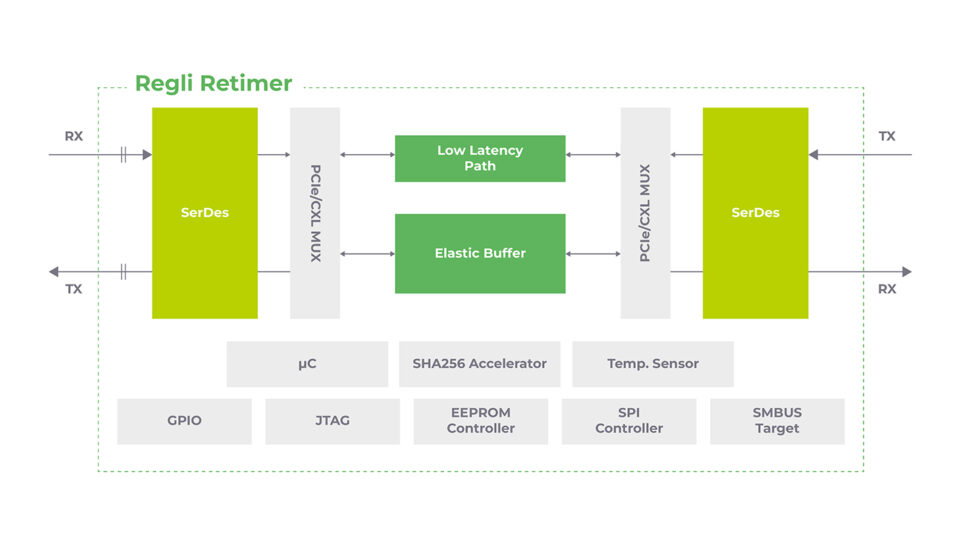

Kandou’s PCIe Regli and USB4 Matterhorn take the founding premise of a retimer’s reason to exist and push it as far as mathematically possible. The distinction between a retimer and redriver is a neat binary now, but a low-efficiency retimer will only end up holding system designers back in the future, just as redrivers are doing now.

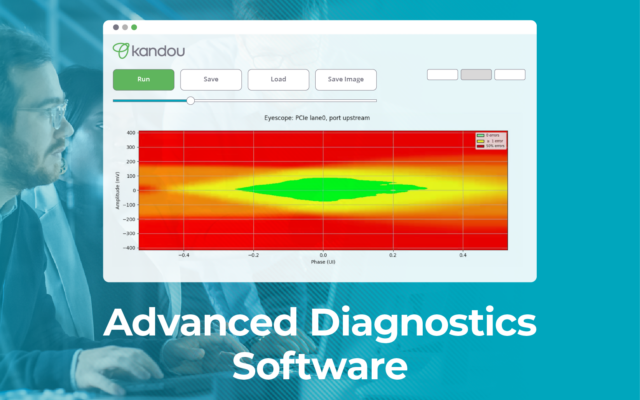

A perfect retimer connection would boost the signal with zero latency and zero errors. Matterhorn and Regli provide the next best thing: ultra-low latency of under 10 nanoseconds and ultra-high reliability, with a bit error rate (BER) of 10⁻¹². This level of efficiency and precision is only possible because of Kandou’s grounding in advanced mathematics and aspiration to Swiss precision and absolute perfection, going beyond to optimize every single bit in the chain.
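To put those two figures in perspective, here is a back-of-the-envelope calculation in Python. It reads the quoted bit error rate as 10^-12 and the latency as a 10 ns upper bound, and applies them to the 20 Gbps and 32 Gbps lane rates mentioned earlier. The helper names are hypothetical, and errors are assumed to occur independently at a constant average rate.

```python
def mean_seconds_between_errors(rate_gbps, ber):
    """Average time between bit errors on one lane, in seconds."""
    errors_per_second = rate_gbps * 1e9 * ber
    return 1.0 / errors_per_second

def bit_times_of_latency(latency_ns, rate_gbps):
    """How many bit periods fit into the given latency (Gbps * ns = bits)."""
    return latency_ns * rate_gbps

BER = 1e-12        # assumed reading of the quoted bit error rate
LATENCY_NS = 10    # upper bound implied by "under 10 nanoseconds"

for rate in (20, 32):
    gap = mean_seconds_between_errors(rate, BER)
    bits = bit_times_of_latency(LATENCY_NS, rate)
    print(f"{rate} Gbps: one error per ~{gap:.2f} s, "
          f"10 ns latency = {bits} bit times")
```

Under these assumptions a 32 Gbps lane averages one bit error roughly every 31 seconds and a 20 Gbps lane one every 50 seconds, while 10 ns of latency corresponds to 320 and 200 bit times respectively.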

When combined with a Tier 1 supply chain and class-leading testing, the result is a pair of retimers that are ready for everything asked of them by the new iterations of SerDes and by the designers building the next generation of systems.